io.net, a Solana-based decentralized AI computing platform developed by IO Research, has reached a fully diluted valuation (FDV) of $1 billion in its latest financing round. In March, io.net announced that it had completed a $30 million Series A round led by Hack VC, with participation from Multicoin Capital, 6th Man Ventures, Solana Ventures, OKX Ventures, Aptos Labs, Delphi Digital, The Sandbox, and Sebastian Borget of The Sandbox. io.net aggregates GPU resources for AI and machine learning companies and aims to deliver compute at lower cost and with faster delivery times. Since its launch in November last year, io.net has grown to more than 25,000 GPUs and has processed more than 40,000 compute hours for AI and machine learning companies.

io.net's vision is to build a global decentralized AI computing network: an ecosystem that connects AI and machine learning teams and enterprises with powerful GPU resources around the world.

In this ecosystem, AI computing resources become a commodity, so neither the supply side nor the demand side is held back by resource shortages. In the future, io.net also plans to offer access to an IO model store and advanced inference capabilities such as serverless inference, along with services such as cloud gaming and pixel streaming.

Business Background

Before introducing io.net's business logic, it helps to look at the decentralized computing sector from two angles: the history of AI computing, and earlier projects that also relied on decentralized computing power.

The development of AI computing

We can depict the development trajectory of AI computing from several key time points:

1. The early days of machine learning (1980s - early 2000s)

During this period, machine learning methods mainly focused on relatively simple models, such as decision trees, support vector machines (SVMs), etc. The computing requirements of these models were relatively low and could be run on personal computers or small servers at the time. The data sets were relatively small, and feature engineering and model selection were key tasks.

Time point: 1980s to early 2000s

Computing power requirements: relatively low; personal computers or small servers were sufficient.

Computing hardware: CPU dominates computing resources.

2. The rise of deep learning (2006 onward)

In 2006, Hinton and his colleagues revived the concept of deep learning, marking the start of this period. The subsequent success of deep neural networks, especially convolutional neural networks (CNNs) and recurrent neural networks (RNNs), was a breakthrough for the field. Demand for computing resources rose sharply at this stage, especially for processing large data sets such as images and speech.

Time point:

ImageNet competition (2012): AlexNet's victory in this competition was a landmark event in the history of deep learning. It demonstrated for the first time the great potential of deep learning in the field of image recognition.

AlphaGo (2016): Google DeepMind's AlphaGo defeated world Go champion Lee Sedol, arguably AI's most celebrated moment to date. It not only demonstrated deep learning in a complex strategy game but also proved to the world that AI could tackle highly complex problems.

Computing power requirements: significantly increased, requiring more powerful computing resources to train complex deep neural networks.

Computing hardware: GPUs began to become the key hardware for deep learning training because they are far better than CPUs in parallel processing.

3. The era of large language models (2018 to present)

With the emergence of BERT (2018) and the GPT series (from 2018 onward), large models came to dominate the AI field. These models typically have billions of parameters, with the largest reaching into the trillions, and their demand for computing resources is unprecedented. Training them requires large numbers of GPUs or more specialized TPUs, along with substantial power and cooling infrastructure.

Time point: 2018 to present.

Computing power requirements: extremely high, requiring a large number of GPUs or TPUs to form scale and support with corresponding infrastructure.

Computing hardware: Beyond general-purpose GPUs, hardware optimized specifically for large machine learning models has emerged, such as Google's TPUs and Nvidia's A-series and H-series data-center GPUs (for example, the A100 and H100).

Over the past 30 years, AI's demand for computing power has grown exponentially: early machine learning needed relatively little, deep learning raised the requirement, and large models have pushed it to the extreme. Over the same period, computing hardware has improved dramatically in both quantity and performance.

This growth shows up not only in larger traditional data centers and faster hardware such as GPUs, but also in high investment thresholds and large expected returns, which have turned computing power into an open battleground for the Internet giants.

Building a traditional centralized GPU computing center requires a large initial investment: expensive hardware (above all the GPUs themselves), data center construction or rental, cooling systems, and maintenance staff.

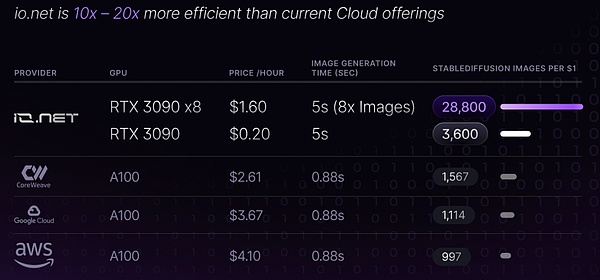

By contrast, a decentralized computing platform such as io.net has clear advantages in build-out costs: it can significantly reduce both initial investment and operating costs, making it possible for small teams to build their own AI models.

First, a decentralized GPU project uses existing distributed resources and requires no centralized investment in hardware or infrastructure. Individuals and enterprises can contribute idle GPUs to the network, reducing the need to centrally procure and deploy high-performance computing resources.

Second, on operating costs, traditional GPU clusters incur continuous maintenance, electricity, and cooling costs. By relying on distributed resources, decentralized GPU projects spread these costs across individual nodes, lightening the operating burden on any single organization.

According to io.net's documentation, io.net greatly reduces operating costs by aggregating underutilized GPU resources from independent data centers, cryptocurrency miners, and other hardware networks such as Filecoin and Render. Combined with Web3 economic incentives, this gives io.net a significant pricing advantage.

Decentralized Computing

Looking back, several decentralized computing projects have achieved remarkable success. Even without economic incentives, they attracted large numbers of participants and produced important results. For example:

Folding@home: A project initiated by Stanford University that simulates protein folding through distributed computing to help scientists understand disease mechanisms, especially diseases linked to protein misfolding such as Alzheimer's disease and Huntington's disease. During the COVID-19 pandemic, Folding@home gathered an enormous amount of computing power to study the novel coronavirus.

BOINC (Berkeley Open Infrastructure for Network Computing): An open-source software platform that supports many kinds of volunteer and grid computing projects in fields such as astronomy, medicine, and climate science. Users contribute idle computing resources to take part in these research projects.

These projects prove that decentralized computing is feasible and show its large potential: mobilizing the unused computing resources of society at large can add significant computing power. Adding a Web3 economic model could make this even more cost-effective. Web3 experience shows that well-designed incentives are important for attracting and retaining participants; an incentive model can build a mutually beneficial community that expands the business and drives technological progress in a virtuous cycle.

By introducing incentive mechanisms, io.net can therefore attract a wide range of participants to contribute computing power and form a powerful decentralized computing network.

The Web3 economic model and the potential of decentralized computing give io.net a strong driver for growth, enabling efficient resource utilization and cost optimization. Beyond promoting technological innovation, this creates value for participants and could help io.net stand out in the competitive AI field, with large growth potential and market space.

io.net Technology

Cluster

A GPU cluster connects multiple GPUs over a network so that they can cooperate on a single computing workload. This approach greatly improves efficiency and the ability to handle complex AI tasks.

Cluster computing not only speeds up the training of AI models, but also enhances the ability to process large-scale data sets, making AI applications more flexible and scalable.

Traditional Internet companies train AI models on large-scale GPU clusters. When this cluster computing model moves to a decentralized setting, however, a series of technical challenges emerges.

Compared with the AI clusters of traditional Internet companies, decentralized GPU clusters face additional problems. For example, nodes may be spread across different geographic locations, which introduces network latency and bandwidth limits. These can slow data synchronization between nodes and, in turn, reduce overall computing efficiency.

In addition, keeping data consistent and synchronized in real time across nodes is crucial for accurate results, so decentralized computing platforms need efficient data management and synchronization mechanisms.

Decentralized cluster computing must also solve the problem of managing and scheduling distributed computing resources so that computing tasks complete reliably.
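To see how bandwidth and latency translate into synchronization delay, the sketch below applies the standard ring all-reduce cost model used in data-parallel training: the gradient is split into N chunks, and each node performs 2(N-1) send/receive steps (reduce-scatter, then all-gather), each moving one chunk and paying one link latency. The gradient size, link speeds, and latencies in the demo are hypothetical illustration values, not io.net measurements, and the source does not say which synchronization scheme io.net uses.

```python
def ring_allreduce_seconds(grad_bytes: float, nodes: int,
                           bandwidth_bytes_per_s: float,
                           latency_s: float) -> float:
    """Estimate one ring all-reduce: 2(N-1) steps, each moving grad_bytes/N."""
    if nodes < 2:
        return 0.0  # a single node has nothing to synchronize
    steps = 2 * (nodes - 1)        # reduce-scatter phase + all-gather phase
    chunk = grad_bytes / nodes     # bytes each node sends per step
    return steps * (latency_s + chunk / bandwidth_bytes_per_s)


if __name__ == "__main__":
    grad = 2e9  # hypothetical: ~1B parameters in fp16 = 2 GB of gradients
    # Hypothetical links: a fast data-center fabric vs. 100 Mbps Internet links.
    datacenter = ring_allreduce_seconds(grad, 8, 50e9, 5e-6)
    internet = ring_allreduce_seconds(grad, 8, 100e6 / 8, 50e-3)
    print(f"data center: {datacenter:.3f} s per sync")
    print(f"internet:    {internet:.1f} s per sync")
```

With these hypothetical numbers, a synchronization that takes well under a tenth of a second inside a data center takes several minutes over 100 Mbps links, which is why geographic spread is a real constraint for decentralized training.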

io.net has built a decentralized cluster computing platform by integrating Ray and Kubernetes.

As a distributed computing framework, Ray is directly responsible for executing computing tasks on multiple nodes. It optimizes the data processing and machine learning model training process to ensure that tasks run efficiently on each node.

Kubernetes plays a key management role in this process. It automates the deployment and management of container applications and ensures that computing resources are dynamically allocated and adjusted according to demand.

In this system, the combination of Ray and Kubernetes realizes a dynamic and elastic computing environment. Ray ensures that computing tasks can be efficiently executed on appropriate nodes, while Kubernetes ensures the stability and scalability of the entire system and automatically handles the addition or removal of nodes.

This synergy enables io.net to provide consistent and reliable computing services in a decentralized environment, meeting the diverse needs of users in terms of data processing and model training.

In this way, io.net not only optimizes resource usage and reduces operating costs, but also improves system flexibility and user control. Users can easily deploy and manage computing tasks of various sizes without worrying about the specific configuration and management details of the underlying resources.

This decentralized computing model, with the powerful functions of Ray and Kubernetes, ensures the efficiency and reliability of the io.net platform when handling complex and large-scale computing tasks.
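The division of labor between Ray and Kubernetes can be illustrated with a toy, pure-Python model. A hypothetical `Cluster` class plays the Kubernetes-like role of adding and removing nodes, while its `submit` method plays the Ray-like role of placing each task on the least-loaded node with enough free GPUs. This is not the Ray or Kubernetes API and not io.net's scheduler; it only shows why node lifecycle management and task placement are separate concerns that must cooperate when nodes come and go.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    gpus: int
    running: list = field(default_factory=list)  # (task_id, gpus) pairs

    @property
    def free_gpus(self) -> int:
        return self.gpus - sum(g for _, g in self.running)


class Cluster:
    """Toy model: elastic membership (Kubernetes-like) + placement (Ray-like)."""

    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}
        self.pending: list[tuple[str, int]] = []  # tasks waiting for capacity

    # "Kubernetes" role: nodes join and leave at any time.
    def add_node(self, name: str, gpus: int) -> None:
        self.nodes[name] = Node(name, gpus)
        self._drain_pending()  # new capacity may unblock queued tasks

    def remove_node(self, name: str) -> None:
        node = self.nodes.pop(name)
        self.pending.extend(node.running)  # requeue tasks instead of losing them
        self._drain_pending()

    # "Ray" role: place each task on a node that can run it.
    def submit(self, task_id: str, gpus: int) -> str | None:
        candidates = [n for n in self.nodes.values() if n.free_gpus >= gpus]
        if not candidates:
            self.pending.append((task_id, gpus))
            return None
        target = max(candidates, key=lambda n: n.free_gpus)  # least loaded
        target.running.append((task_id, gpus))
        return target.name

    def _drain_pending(self) -> None:
        queued, self.pending = self.pending, []
        for task_id, gpus in queued:
            self.submit(task_id, gpus)  # re-pends anything that still won't fit

    def placement(self) -> dict[str, str]:
        return {t: n.name for n in self.nodes.values() for t, _ in n.running}


if __name__ == "__main__":
    cluster = Cluster()
    cluster.add_node("gamer-pc", gpus=1)     # one high-end card
    cluster.add_node("miner-rig", gpus=8)    # many cards
    cluster.submit("train-job", gpus=4)      # only the rig has room
    cluster.submit("inference-job", gpus=1)  # goes to the node with most free GPUs
    cluster.remove_node("miner-rig")         # node leaves; its tasks are requeued
    print(cluster.placement(), cluster.pending)
```

Requeuing on node departure is the key design choice here: in a network of volunteer machines, nodes disappearing mid-job is normal, so the scheduler treats it as routine rather than as a failure.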

Privacy

Task scheduling in a decentralized cluster covers far more complex scenarios than scheduling inside a single data center, and moving data and computing tasks across the network adds security risks, so decentralized clusters must also address security and privacy. io.net strengthens security and privacy by exploiting the decentralized nature of a mesh private network (mesh VPN). Because such a network has no central hub or gateway, the risk of a single point of failure is greatly reduced, and the network can keep operating even if some nodes fail.

Data is transmitted along multiple paths within the mesh network. This design increases the difficulty of tracing the source or destination of the data, thereby enhancing the anonymity of users.
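The multi-path idea can be sketched with the standard library: find several routes between two peers that share no intermediate relay, then spread a message's chunks across them so that no single relay sees the whole payload. The `MESH` topology, the 4-byte chunk size, and the greedy path selection are illustrative assumptions; the source does not describe how io.net's mesh VPN actually chooses routes.

```python
from collections import deque

# Hypothetical mesh: each peer lists its directly connected neighbors.
MESH = {
    "A": ["B", "C", "D"],
    "B": ["A", "E"],
    "C": ["A", "E"],
    "D": ["A", "F"],
    "F": ["D", "E"],
    "E": ["B", "C", "F"],
}


def shortest_path(graph, src, dst, banned=frozenset()):
    """Breadth-first search from src to dst that never enters a banned node."""
    prev = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:  # walk predecessors back to src
                path.append(node)
                node = prev[node]
            return path[::-1]
        for nxt in graph[node]:
            if nxt not in prev and nxt not in banned:
                prev[nxt] = node
                queue.append(nxt)
    return None


def disjoint_paths(graph, src, dst, k):
    """Greedily collect up to k paths that share no intermediate relay."""
    paths, used = [], set()
    while len(paths) < k:
        path = shortest_path(graph, src, dst, banned=frozenset(used))
        if path is None:
            break
        paths.append(path)
        used.update(path[1:-1])  # these relays are now off-limits
        if len(path) == 2:       # a direct link has no relays to exclude
            break
    return paths


def spread_chunks(message: bytes, paths, chunk_size=4):
    """Assign numbered chunks to paths round-robin: {path_index: [(seq, chunk)]}."""
    chunks = [message[i:i + chunk_size] for i in range(0, len(message), chunk_size)]
    plan = {i: [] for i in range(len(paths))}
    for seq, chunk in enumerate(chunks):
        plan[seq % len(paths)].append((seq, chunk))
    return plan


def reassemble(plan):
    """Receiver side: order chunks by sequence number and join them."""
    pieces = sorted(p for chunks in plan.values() for p in chunks)
    return b"".join(chunk for _, chunk in pieces)
```

For example, `disjoint_paths(MESH, "A", "E", 3)` yields routes through B, through C, and through D and F; losing relay B still leaves `shortest_path(MESH, "A", "E", banned=frozenset({"B"}))` available, which also illustrates the mesh's resilience to single-node failure.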

In addition, with techniques such as packet padding and timing obfuscation (collectively, traffic obfuscation), the mesh VPN can further obscure data-flow patterns, making it difficult for eavesdroppers to analyze traffic or identify specific users or data flows.
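Packet padding and timing obfuscation can be illustrated as follows: every payload is padded to one fixed size, so packet lengths reveal nothing about content, and packets are released on a jittered schedule, so the gaps between them do not mirror the application's own rhythm. The 64-byte `PACKET_SIZE`, the 2-byte length prefix, and the jitter parameters are hypothetical choices for this sketch, not io.net's wire protocol.

```python
import os
import random

PACKET_SIZE = 64  # hypothetical fixed on-wire size in bytes


def pad_packet(payload: bytes, size: int = PACKET_SIZE) -> bytes:
    """Prefix a 2-byte length, then pad with random filler to a fixed size."""
    if len(payload) > size - 2:
        raise ValueError("payload too large for one packet; split it first")
    header = len(payload).to_bytes(2, "big")
    filler = os.urandom(size - 2 - len(payload))
    # In a real VPN the whole packet would also be encrypted, so an observer
    # would see only equal-sized ciphertexts.
    return header + payload + filler


def unpad_packet(packet: bytes) -> bytes:
    """Read the length prefix and drop the filler."""
    length = int.from_bytes(packet[:2], "big")
    return packet[2:2 + length]


def jittered_schedule(n_packets: int, base_interval: float,
                      jitter: float, seed=None) -> list:
    """Send times where each gap is base_interval plus a random extra delay."""
    rng = random.Random(seed)
    t, times = 0.0, []
    for _ in range(n_packets):
        t += base_interval + rng.uniform(0, jitter)
        times.append(t)
    return times
```

Both measures trade efficiency for privacy: padding wastes bandwidth on filler and jitter adds latency, so a real system would tune packet size and jitter against throughput.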

io.net's privacy mechanisms address these issues because, together, they create a complex and constantly changing transmission environment from which external observers find it hard to extract useful information.

The decentralized structure also avoids routing all data through a single point, which both improves the system's robustness and reduces its exposure to attack. Multi-path transmission and traffic obfuscation then add a further layer of protection for users' data in transit, strengthening the overall privacy of the io.net network.

Economic model

IO is the native cryptocurrency and protocol token of the io.net network, which can meet the needs of two major entities in the ecosystem: AI startups and developers, and computing power providers.

For AI startups and developers, IO simplifies payment for cluster deployment; they can also pay the fees for computing tasks on the network with IOSD Credits, which are pegged to the US dollar. Inference on each model deployed on io.net is paid for through tiny IO transactions.

For suppliers, especially those who provide GPU resources, IO Coin ensures that they are fairly rewarded for their resources. Whether it is direct income when the GPU is rented, or passive income from participating in network model inference when idle, IO Coin provides rewards for every contribution of the GPU.

In the io.net ecosystem, IO Coin is not only a medium of payment and incentives but also a governance tool. It makes every stage of model development, training, deployment, and application development more transparent and efficient, and aligns the interests of all participants.

In this way, IO Coin not only incentivizes participation and contribution within the ecosystem, but also provides a comprehensive support platform for AI startups and engineers, promoting the development and application of AI technology.

io.net has put considerable effort into its incentive model so that the whole ecosystem forms a virtuous cycle. Its goal is to set a direct hourly rate, denominated in US dollars, for each GPU card in the network, which requires a clear, fair, and decentralized pricing mechanism for GPU/CPU resources.

As a two-sided market, the incentive model must solve two core challenges: on the one hand, lowering the high cost of renting GPU/CPU computing power, a key factor in growing demand from AI and ML workloads; on the other, relieving the shortage of rentable GPU nodes at GPU cloud providers.

On the demand side, the design therefore takes competitor pricing and availability into account to offer competitive, attractive options, and adjusts prices during peak hours and when resources are scarce.

In terms of computing power supply, io.net focuses on two key markets: gamers and crypto GPU miners. Gamers have high-end hardware and fast Internet connections, but usually only have one GPU card; while crypto GPU miners have a large number of GPU resources, although they may face limitations in Internet connection speed and storage space.

The pricing model for computing power therefore weighs multiple factors: hardware performance, Internet bandwidth, competitor pricing, supply availability, peak-hour adjustments, committed-use pricing, and location. It must also account for opportunity cost, that is, the best profit the same hardware could earn by mining proof-of-work cryptocurrencies instead.
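To show how such factors might combine, the sketch below computes a USD hourly rate for one GPU. The factor list follows the paragraph above, but every weight and threshold (the 30% undercut, the 1,000 Mbps bandwidth knee, the 80% utilization trigger, the 15% peak surcharge, the 20% commitment cap) and the multiplicative form itself are hypothetical assumptions; io.net's actual pricing formula is not given in the source.

```python
from dataclasses import dataclass


@dataclass
class GpuOffer:
    perf_score: float           # hypothetical benchmark score; 1.0 = reference GPU
    bandwidth_mbps: float       # measured Internet bandwidth of the node
    region_multiplier: float    # hypothetical location adjustment; 1.0 = neutral
    mining_usd_per_hour: float  # what the same card could earn mining PoW coins


@dataclass
class MarketState:
    competitor_usd_per_hour: float  # reference cloud price for the reference GPU
    utilization: float              # share of network GPUs currently rented, 0..1
    peak_hours: bool


def hourly_rate_usd(offer: GpuOffer, market: MarketState,
                    committed_hours: float = 0) -> float:
    """Hypothetical USD/hour rate combining the pricing factors listed above."""
    # Competitor pricing, scaled by hardware performance, undercut by 30%.
    price = market.competitor_usd_per_hour * offer.perf_score * 0.70
    # Bandwidth: full price at 1,000 Mbps or more, discounted linearly below.
    price *= min(1.0, 0.5 + offer.bandwidth_mbps / 2000)
    # Supply availability: raise the price once utilization exceeds 80%.
    if market.utilization > 0.8:
        price *= 1 + (market.utilization - 0.8)
    # Peak-hour surcharge.
    if market.peak_hours:
        price *= 1.15
    # Location difference.
    price *= offer.region_multiplier
    # Committed-use discount, growing with committed hours, capped at 20%.
    price *= 1 - min(0.20, committed_hours / 10_000)
    # Floor: never quote below what the hardware could earn mining instead.
    return round(max(price, offer.mining_usd_per_hour), 4)


if __name__ == "__main__":
    offer = GpuOffer(perf_score=1.0, bandwidth_mbps=1000,
                     region_multiplier=1.0, mining_usd_per_hour=0.10)
    quiet = MarketState(competitor_usd_per_hour=2.00, utilization=0.5, peak_hours=False)
    busy = MarketState(competitor_usd_per_hour=2.00, utilization=0.9, peak_hours=True)
    print(hourly_rate_usd(offer, quiet), hourly_rate_usd(offer, busy))
```

The mining floor reflects the supply-side point above: a miner will only rent out a card if the rate at least matches what the card earns mining.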

In the future, io.net will further provide a set of fully decentralized pricing solutions and create a benchmarking tool similar to speedtest.net for miner hardware to create a fully decentralized, fair and transparent market.

How to participate

io.net launched the Ignition event, which is the first phase of the io.net community incentive program, with the aim of accelerating the growth of the IO network.

The program has three reward pools that are completely independent of each other. Participants can earn rewards from each pool separately and do not need to link the same wallet to every pool.

GPU Node Rewards

For nodes that are already connected, airdrop points are calculated from November 4, 2023 until the end of the event on April 25, 2024. When the Ignition event ends, all airdrop points users have earned will be converted into airdrop rewards.

Airdrop points are based on four factors:

A. Job hours (Ratio of Job Hours Done, RJD): the total time spent running jobs from November 4, 2023 to the end of the event.

B. Bandwidth (BW): nodes are classified into tiers by bandwidth speed:

Low speed: download speed 100MB/second, upload speed 75MB/second.

Medium speed: download speed 400MB/second, upload speed 300MB/second.

High speed: download speed 800MB/second.

C. GPU model (GM): points depend on the GPU model; the higher the GPU's performance, the more points it earns.

D. Uptime (UT): the total time the worker ran successfully, from when it was connected (counting from November 4, 2023) to the end of the event.
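The source lists the four inputs but not how io.net combines them. Purely as an illustration, the sketch below assumes one possible scoring rule: a per-hour base value from the GPU model, multiplied by a bandwidth-tier multiplier and by a 50/50 blend of the uptime ratio and the job-hour ratio. The tier thresholds follow the speeds listed above (treated here as minimums, in the units the list states); the GPU table, the multipliers, and the formula are all hypothetical.

```python
from __future__ import annotations

# Hypothetical base points per eligible hour by GPU model (GM).
GPU_MODEL_POINTS = {"RTX 3090": 1.0, "RTX 4090": 1.5, "A100": 3.0, "H100": 4.0}

# Bandwidth tiers (BW) from the list above, checked fastest first.
# Treating the listed speeds as minimum thresholds is an assumption.
BANDWIDTH_TIERS = [  # (name, min download, min upload, hypothetical multiplier)
    ("high", 800, 0, 1.2),
    ("medium", 400, 300, 1.1),
    ("low", 100, 75, 1.0),
]


def bandwidth_tier(download: float, upload: float) -> tuple[str, float]:
    """Return the first (fastest) tier whose minimums the node meets."""
    for name, min_down, min_up, multiplier in BANDWIDTH_TIERS:
        if download >= min_down and upload >= min_up:
            return name, multiplier
    return "below minimum", 0.0


def airdrop_points(job_hours: float, uptime_hours: float, eligible_hours: float,
                   gpu_model: str, download: float, upload: float) -> float:
    rjd = job_hours / eligible_hours            # RJD: share of window spent on jobs
    ut = uptime_hours / eligible_hours          # UT: share of window worker was up
    gm = GPU_MODEL_POINTS.get(gpu_model, 0.5)   # GM: unknown models get a low default
    _, bw = bandwidth_tier(download, upload)    # BW: tier multiplier
    # Hypothetical combination: uptime and job hours weighted equally.
    return round(eligible_hours * gm * bw * (0.5 * ut + 0.5 * rjd), 2)
```

Under this assumed rule, a node that is always online but rarely rented still earns points, while a node that is both online and busy earns up to twice as much, which mirrors the intent of rewarding both availability and actual work.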

It is worth noting that the airdrop points are expected to be available for users to view around April 1, 2024.

Galxe Mission Rewards

Galxe mission link: https://galxe.com/io.net/campaign/GCD5ot4oXPAt

Discord Role Rewards

This pool is administered by io.net's community management team, and users must submit a correct Solana wallet address in Discord.

Users receive an Airdrop Tier role according to their contributions, activity, content creation, and other participation.

Summary

In general, io.net and similar decentralized AI computing platforms are opening a new chapter in AI computing, even though they still face challenges in implementation complexity, network stability, and data security. Even so, io.net has the potential to transform the AI business model. I believe that as these technologies mature and the computing power community grows, decentralized AI computing may become a key force in driving AI innovation and adoption.

JinseFinance
